The Margin Trap Myth: Why the Best Infrastructure Companies Often Start Ugly

Rethinking Gross Margins in Infrastructure and AI

Innovation doesn’t come cheaply. At $2M ARR, Vercel* sat at 0% gross margins. Then it soared past $8M (and later $200M) ARR with 70%+ margins, without compromising on product quality. Charging ahead as an industry leader and using new technology is expensive, which can make the gross margin equation appear out of whack early on. But as underlying infrastructure costs decrease, the scales balance.

"Our early approach to gross margins was very deliberate,” Marten Abrahamsen, Vercel’s Chief Financial Officer, told us. “Our hypothesis was: solve the hardest infrastructure problems and the margins will follow. So we focused relentlessly on developer experience and product velocity, and the unit economics naturally improved as we achieved scale from $2M to $200M ARR."

Those same dynamics are playing out now across AI companies.

Over the last decade, the software industry’s growth has been fueled as much by extraordinary business models as by extraordinary technical achievements. Cloud computing enabled SaaS business models that Wall Street grew to love. At its heart, SaaS is valuable because it combines low variable costs with strong retention rates and predictability. The best software companies incur very low COGS, enabling them to grow quickly and earn significant cash flows at scale. Even at the early stages, startups are expected to show high gross margins (70%+) to attract funding, forcing founders to optimize from their earliest days of sales.

However, what this ignores is that many of the most successful software companies of the past 15 years started out with low to negative gross margins. Snowflake, CrowdStrike, Cloudflare and Vercel didn’t achieve target “SaaS gross margins” until they reached many tens or even hundreds of millions in ARR. Similarly, OpenAI, Cursor, Anthropic*, Wiz and many others are still on a journey to reach these targets. Anthropic is getting closer, recently achieving 60% gross margin on its API business, per The Information’s reporting.

In infrastructure software, where we invest, we believe that overoptimizing for margins early can be a mistake. Particularly in serverless infrastructure and AI, many of the best opportunities start out with low or negative gross margins—so much so that in certain situations we view high revenue growth with low gross margins as a positive signal for early-stage startups. Today, this pattern is accelerating across AI, but with one key difference: the input cost decline is happening faster and more predictably than we've ever seen.

Many of today’s AI “killer apps” consume an enormous number of LLM tokens to enable a delightful customer experience. Everyone from the codegen players to AI legal providers to prompt-to-app builders has been labeled a negative-margin wrapper that only creates value for the LLM providers, echoing the same critique Vercel heard early on.

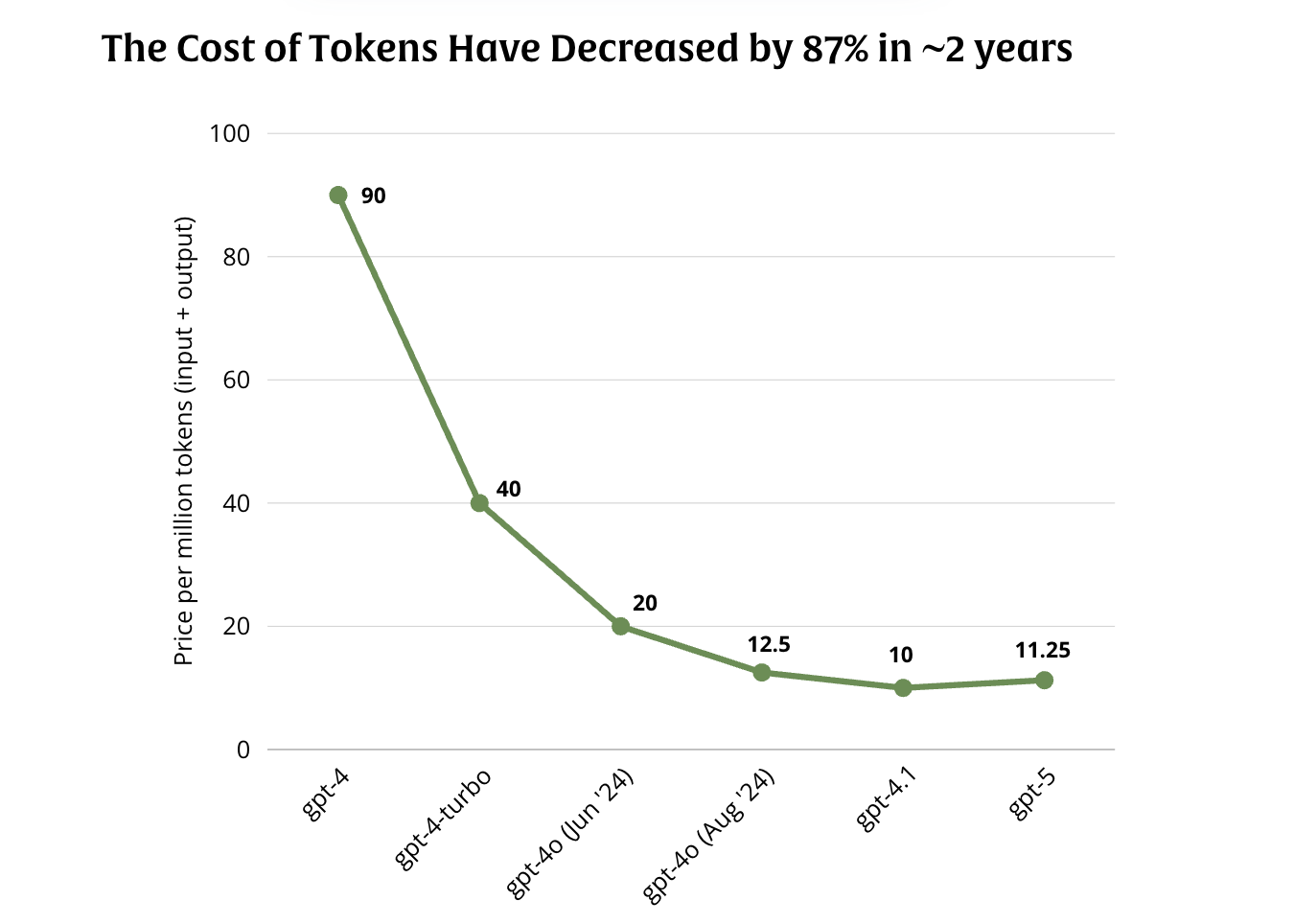

In isolation, if you asked the average founder or VC whether token prices for LLMs at a consistent level of intelligence will go up or down over the next five years, they’d universally say prices will go down. We’ve been seeing this play out since the launch of GPT-3, with token costs dropping 80%+ over the past two years.

But when investors need to make that bet in the context of gross margin improvements, it somehow seems more difficult. Perhaps that’s because folks assume decreasing LLM costs will reduce prices for AI apps as well, exposing a lack of pricing power across the market. We don’t buy into that assumption. The history of software has shown that exceptional products retain pricing power for a long time, even if underlying input costs go down. Just look at Datadog, which has gone from a developer darling to a source of pricing frustration, all while cloud data storage has become dramatically cheaper.
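To make the arithmetic concrete, here is a minimal sketch of what happens to gross margin when a product holds its price while token costs fall by 80%. The $100 price and the starting token bill are hypothetical figures chosen for illustration, not data from any company mentioned here.

```python
def gross_margin(price: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue: (price - cogs) / price."""
    if price <= 0:
        raise ValueError("price must be positive")
    return (price - cogs) / price


# Hypothetical: a $100 subscription whose token bill is also $100 today.
price = 100.0
cogs_today = 100.0

# Assumption: token costs fall 80% and the product keeps its price.
cogs_after_drop = 20.0

margin_today = gross_margin(price, cogs_today)
margin_after_drop = gross_margin(price, cogs_after_drop)

print(margin_today)       # 0.0
print(margin_after_drop)  # 0.8
```

If the product had to cut its price in step with its inputs, the margin would stay near zero, which is why pricing power is the assumption that matters.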

This pattern isn’t unique to AI. As infrastructure software (where we invest) has moved from delivering bits to running compute, we’ve seen economies of scale emerge in ways that aren’t typical of most SaaS companies, forcing us to update our assumptions of what great looks like at the early stages.

Serverless software effectively moves the cost of idle capacity from the consumer of the service (often a developer) to the serverless provider. It’s built on the assumption that by serving many customers, a serverless provider can run software more efficiently and effectively than any single customer can. In part, this is because the amount of idle capacity the provider needs scales logarithmically rather than linearly with the usage it serves.

"The more workloads we served, the more we understood how to optimize the infrastructure itself,” Marten Abrahamsen told us. “Technologies like Fluid compute emerged directly from this experience. We realized we could dynamically allocate resources based on real usage patterns rather than provisioning for peak capacity. That's the kind of technical unlock you can only achieve by actually running at scale."

At the early stages, serverless companies in our portfolio, like Vercel and Neon* (now part of Databricks), have had to keep machines warm across regions to enable an exceptional experience for their customers. At times this scared off investors who believed the companies were just reselling compute from larger clouds. But when usage ramps, margins can improve very quickly—delivering exceptional developer experience and letting scaling laws kick in.

Scale alone doesn’t tell the whole story. Almost every serverless company we work with has achieved technical unlocks that improved their margins as they’ve served more usage. The core learning for us has been that teams can only become the best in the world at serving a workload by actually doing so at scale. The more databases you host, CDN requests you manage, or inference requests you serve, the more likely you are to find ways to improve.

fal*, the generative media inference cloud, has proven this quickly. By focusing on image and video models, they gained a unique perspective into how those models are run, enabling their stellar engineering team to continually find ways to optimize them. The foundation model labs are showing this at even greater scale. Margins on inference APIs have consistently increased due to both greater scale and engineering improvements that were made possible by OpenAI and Anthropic attracting the best minds in inference with the opportunity to work on the most important inference systems.

Margin expansion in AI doesn’t perfectly map to margin expansion in serverless, but founders and investors can translate many of the same learnings. Past a certain scale, the incremental tokens required to serve another customer go down, much like the incremental compute costs declined for serverless. AI companies with meaningful usage can do everything from LLM response caching to routing prompts to smaller, fine-tuned models trained on their proprietary datasets. That improves margins even if per-token costs remain flat.
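A rough sketch of how caching and routing lower the cost to serve, even at flat per-token prices, might look like the following. The `ServingMix` class, the hit rate, the routing share, and the dollar figures are all hypothetical, and the model assumes a cache hit costs nothing, which is a simplification.

```python
from dataclasses import dataclass


@dataclass
class ServingMix:
    cache_hit_rate: float     # share of requests answered from a response cache
    small_model_share: float  # share of cache misses routed to a smaller model
    large_cost: float         # dollars per 1,000 requests on the large model
    small_cost: float         # dollars per 1,000 requests on the small model

    def cost_per_thousand(self) -> float:
        """Blended cost per 1,000 requests, treating cache hits as free."""
        miss_rate = 1.0 - self.cache_hit_rate
        routed = self.small_model_share * self.small_cost
        unrouted = (1.0 - self.small_model_share) * self.large_cost
        return miss_rate * (routed + unrouted)


# Hypothetical baseline: every request goes to the large model.
baseline = ServingMix(cache_hit_rate=0.0, small_model_share=0.0,
                      large_cost=10.0, small_cost=2.0)

# Hypothetical optimized mix: 25% cache hits, half of misses on a small model.
optimized = ServingMix(cache_hit_rate=0.25, small_model_share=0.5,
                       large_cost=10.0, small_cost=2.0)

print(baseline.cost_per_thousand())   # 10.0
print(optimized.cost_per_thousand())  # 4.5
```

In this illustration the unit prices of both models never change, yet the blended cost per 1,000 requests falls by more than half.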

With strong product velocity, the best founders define and price units of value that give them independence from direct comparison to input costs. Enterprise Vercel customers don’t view the ROI of the platform solely in terms of the cost of the compute. Vercel’s workflow enabled engineers to ship quickly and not worry about infrastructure, freeing them from days or weeks of human DevOps work. By integrating extraordinary infrastructure and workflow, Vercel’s value equation tipped.

To assess this, we like to focus on “Net New COGS,” which we define as the COGS required to serve Net New ARR added, rather than the COGS of the entire business. With AI companies growing faster than ever, we believe it’s far more important to optimize the cost to serve the next $1M of ARR than the cost to serve the last $1M of ARR.
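One way to compute this is sketched below. It approximates Net New COGS as the change in annualized COGS between two points in time, which is our simplifying assumption: it blends in any efficiency gains on the existing customer base. The function names and all dollar figures are hypothetical.

```python
def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - cogs) / revenue


def net_new_gross_margin(arr_start: float, cogs_start: float,
                         arr_end: float, cogs_end: float) -> float:
    """Margin on Net New ARR, using the change in COGS as Net New COGS."""
    net_new_arr = arr_end - arr_start
    net_new_cogs = cogs_end - cogs_start
    return gross_margin(net_new_arr, net_new_cogs)


# Hypothetical company: $4M ARR at a 10% margin grows to $10M ARR.
arr_start, cogs_start = 4_000_000, 3_600_000
arr_end, cogs_end = 10_000_000, 5_400_000

blended = gross_margin(arr_end, cogs_end)
net_new = net_new_gross_margin(arr_start, cogs_start, arr_end, cogs_end)

print(blended)  # 0.46
print(net_new)  # 0.7
```

Here the blended margin still looks weak at 46%, while the margin on the newest $6M of ARR is already 70%.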

None of this is to say that improving margins is easy or that all low-margin companies will eventually turn the tide. Product moats can be very hard to assess; a good rule of thumb is how many really hard things a competitor would need to do to match the product. Just building an exceptional user experience, regardless of underlying economics, is hard enough. Doing so while expanding margins compounds the difficulty.

Bottom Line: In infrastructure software and AI, overoptimizing for margins early can be a mistake. The best opportunities often start with low or negative gross margins, using scale and technical innovation to unlock dramatic improvements over time.

As AI makes it easier for everyone to write code, we want to partner with exceptional entrepreneurs taking the hard path. If you’re building a serverless or token-heavy product with an extreme focus on serving a growing customer need, we’d love to chat.